Many organizations rely heavily on infrastructure-as-code (IaC) tools like Terraform or OpenTofu. These tools often involve large, complex configurations composed of hundreds of interdependent modules, sometimes referred to as ‘terraliths’, that can be error-prone and difficult to manage.

When managing infrastructure with IaC, companies must also manage the IaC tools used to configure said infrastructure. Teams can often struggle to maintain consistency across environments, resulting in more time spent firefighting than delivering business value.

Agentic infrastructure addresses this problem. Its intelligent orchestration layer works alongside your IaC, configuration management, CI/CD pipelines, and container orchestration tools, amplifying their effectiveness.

In this post, we’ll walk through the current state of infrastructure management, discuss how agentic infrastructure enhances it, and present some concrete implementation strategies for using agentic infrastructure alongside your traditional approach.

The current state of infrastructure

For the majority of modern infrastructure teams, the management approach is the same:

- Use an IaC tool like Terraform, OpenTofu, or Pulumi to manage infrastructure resources from network to compute.

- Implement a CI/CD pipeline or an infrastructure orchestration platform (GitHub Actions, Jenkins, Terraform Cloud, Pulumi Cloud) to handle deployments.

- Adopt Kubernetes or another container orchestration platform to ensure all containers are running smoothly.

- Monitor with tools like Datadog, Prometheus, Grafana, or the Elastic Stack (Elasticsearch, Logstash, and Kibana).

While this stack is proven, a few issues arise that are still hard to solve:

- Managing infrastructure at scale: Teams create modules to reduce duplication, but these can have unintended consequences, slowly transforming into monoliths that nobody dares to modify.

- Speed versus control: Locking down infrastructure changes behind extensive approvals sacrifices speed, while prioritizing developer velocity can lead to security vulnerabilities, configuration drift, and higher costs.

- Infrastructure drift: Any manual change not incorporated into IaC is drift. The next time you deploy, your infrastructure reverts to the state defined in code, which undoes a manual fix and can reintroduce the incident it solved.
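To make the drift problem concrete, here is a minimal sketch of drift detection as a comparison between the state declared in code and the state observed in the live environment. The resource name, attributes, and the `detect_drift` helper are hypothetical and chosen for illustration; real IaC tools compute this from their own state files and provider APIs.

```python
from __future__ import annotations

from typing import Any


def detect_drift(
    declared: dict[str, dict[str, Any]],
    actual: dict[str, dict[str, Any]],
) -> dict[str, dict[str, tuple[Any, Any]]]:
    """Return, per resource, the attributes whose live value differs from code.

    Each entry maps attribute -> (declared_value, live_value).
    """
    drift: dict[str, dict[str, tuple[Any, Any]]] = {}
    for resource, want in declared.items():
        have = actual.get(resource, {})
        changed = {
            attr: (value, have.get(attr))
            for attr, value in want.items()
            if have.get(attr) != value
        }
        if changed:
            drift[resource] = changed
    return drift


# Hypothetical example: someone widened a CIDR range by hand during an incident.
declared = {"web_sg": {"ingress_port": 443, "cidr": "10.0.0.0/16"}}
actual = {"web_sg": {"ingress_port": 443, "cidr": "0.0.0.0/0"}}

drift = detect_drift(declared, actual)
print(drift)
```

Until the manual change is either written back into the code or deliberately discarded, the next deployment will silently overwrite it.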

To help resolve these problems, agentic infrastructure has emerged as a novel approach that augments your current tech stack.

What is agentic infrastructure?

Agentic infrastructure represents an intelligent orchestration layer that is context-aware, takes autonomous actions (albeit with human supervision in some cases), and learns from its experiences.

Teams implement agentic infrastructure to:

- Provision environments through natural language interaction

- Correct drift by analyzing whether it was intentional or accidental

- Predict scaling needs

- Diagnose root causes of failures

- Attempt remediation to restore compliance

While traditional automation is deterministic, agentic infrastructure is flexible. For example, with traditional automation, you would see something like “If CPU is over 75%, send an alert to this Slack channel.” But with agentic infrastructure, you would more likely see, “Keep my applications running smoothly.”

Instead of simply executing a predefined condition, an agentic system will see what is happening and then decide what to do based on the broader context: It might increase the instance size, deploy a new node, or provide an entirely different solution.
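The contrast can be sketched in a few lines of Python. This is a deliberately simplified stand-in: the `Context` fields, thresholds, and action names are assumptions made for illustration, and a real agentic system would weigh far more signals than a fixed chain of checks. The point is only that the second function decides based on the surrounding situation rather than on a single metric.

```python
from dataclasses import dataclass


@dataclass
class Context:
    """Hypothetical snapshot of what the system can observe."""
    cpu_percent: float
    memory_percent: float
    node_count: int
    max_nodes: int
    recent_deploy: bool


def static_rule(cpu_percent: float) -> str:
    """Traditional automation: one condition, one predefined reaction."""
    return "alert_slack" if cpu_percent > 75 else "none"


def choose_action(ctx: Context) -> str:
    """Context-dependent choice among several possible responses."""
    if ctx.cpu_percent <= 75 and ctx.memory_percent <= 75:
        return "none"
    if ctx.recent_deploy:
        # Load spiked right after a release, so the release is the likely cause.
        return "propose_rollback"
    if ctx.node_count < ctx.max_nodes:
        return "add_node"
    return "propose_larger_instance"


ctx = Context(cpu_percent=90, memory_percent=40, node_count=3,
              max_nodes=5, recent_deploy=False)
print(static_rule(ctx.cpu_percent))  # alert_slack
print(choose_action(ctx))            # add_node
```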

Enterprise benefits of adopting agentic infrastructure

There are multiple benefits to implementing agentic AI:

- Speed development: Provision infrastructure faster, handing your teams more time to focus on what really matters: delivering new features and new business outcomes.

- Detect infrastructure drift: AI systems can automatically flag drift for human review and supply the context needed for proper and prompt remediation.

- Accelerate your response to security vulnerabilities: Whenever a new CVE is announced, AI systems can automatically detect which containers are using the affected image and orchestrate rolling updates across your entire infrastructure.

- Enable cost optimization: With proper guardrails, agentic AI right-sizes your infra components based on data (not estimates), cuts costs by automatically shutting down your non-production environments, and cleans up idle resources.
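As an illustration of the vulnerability-response benefit, the first step is working out which workloads run an affected image. The sketch below assumes you already have an inventory mapping workload names to image references; the workload names, registry host, and `affected_workloads` helper are hypothetical.

```python
from __future__ import annotations


def affected_workloads(
    workloads: dict[str, list[str]],
    vulnerable_images: set[str],
) -> dict[str, list[str]]:
    """Map each workload to the vulnerable images it runs, skipping clean ones."""
    hits: dict[str, list[str]] = {}
    for name, images in workloads.items():
        matched = sorted(set(images) & vulnerable_images)
        if matched:
            hits[name] = matched
    return hits


# Hypothetical inventory of running workloads and their container images.
workloads = {
    "checkout": ["registry.example.com/base:1.2", "registry.example.com/checkout:4.0"],
    "search": ["registry.example.com/search:2.1"],
    "billing": ["registry.example.com/base:1.2"],
}
vulnerable = {"registry.example.com/base:1.2"}

print(affected_workloads(workloads, vulnerable))
```

The resulting list is what a rolling update would then be scheduled against, ideally with a human approving the plan for production workloads.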

Integrating your existing infra with agentic AI using Quali Torque

Agentic infrastructure understands your Terraform module dependencies and can dynamically select the appropriate module versions based on your environment requirements. It can also provide unified visibility across all of your Kubernetes clusters and ensure compliance.
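One way to picture version selection is as a constraint check per environment: pick the newest module version that falls inside the range that environment allows. The sketch below is an assumption about how such a rule could look, not a description of any product's internals; the environment names, ranges, and helper functions are hypothetical, and it only handles plain `MAJOR.MINOR.PATCH` version strings.

```python
from __future__ import annotations

from typing import Optional


def parse(version: str) -> tuple[int, ...]:
    """Turn '1.10.0' into (1, 10, 0) so versions compare numerically."""
    return tuple(int(part) for part in version.split("."))


def select_version(available: list[str], minimum: str, below: str) -> Optional[str]:
    """Newest version v with minimum <= v < below, or None if nothing fits."""
    candidates = [v for v in available if parse(minimum) <= parse(v) < parse(below)]
    return max(candidates, key=parse, default=None)


# Hypothetical policy: dev may track the 2.x line, prod stays on 1.x (1.4.0 or later).
ENV_CONSTRAINTS = {"dev": ("2.0.0", "3.0.0"), "prod": ("1.4.0", "2.0.0")}
available = ["1.4.0", "1.4.2", "1.10.0", "2.0.0", "2.1.3"]

for env, (minimum, below) in ENV_CONSTRAINTS.items():
    print(env, select_version(available, minimum, below))
```

Comparing parsed tuples rather than raw strings matters here: as strings, "1.4.2" would sort above "1.10.0".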

Instead of receiving hundreds of firing alerts from your monitoring stack, with agentic AI, you can gain contextual insights about what happened and what the AI did to resolve that particular issue.

The role of EaaS

Modern engineering teams face constant pressure to deliver faster while managing complex cloud infrastructure, where manual environment management introduces bottlenecks and configuration drift.

Environment-as-a-service (EaaS) addresses these challenges by automating provisioning and standardizing configuration. Embedding agentic AI into EaaS takes it to the next level, allowing you to easily define what you want and create a unified platform that consolidates your existing infrastructure tool chain: IaC, configuration management, container orchestration, and even CI/CD pipelines.

This helps you eliminate the cognitive overhead of context-switching between tools, speeds up your deployments, and reduces misconfigurations.

Quali Torque is an EaaS control plane that sits on top of your existing toolchain (Terraform modules, Kubernetes manifests, and CI/CD pipelines). It adds an intelligent orchestration layer that makes these tools more powerful: You describe what you need in natural language, and Torque translates these requests into properly configured infrastructure deployments using your existing Terraform or Kubernetes configurations.

With Torque, you can discover your existing assets by connecting to the Git repositories that contain your Terraform modules, Helm charts, Kubernetes manifests, and other assets.

Torque doesn’t force you to rewrite infrastructure, but instead transforms what you already have into managed and orchestrated blueprints.

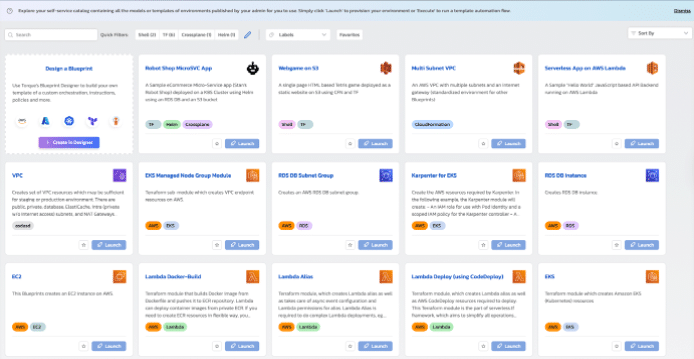

Figure 1: Quali Torque Playground

Torque’s AI Copilot lets you easily describe what you want your environment to look like. This eliminates the need for engineers to perform manual configuration work, reducing your time to environment from hours to minutes.

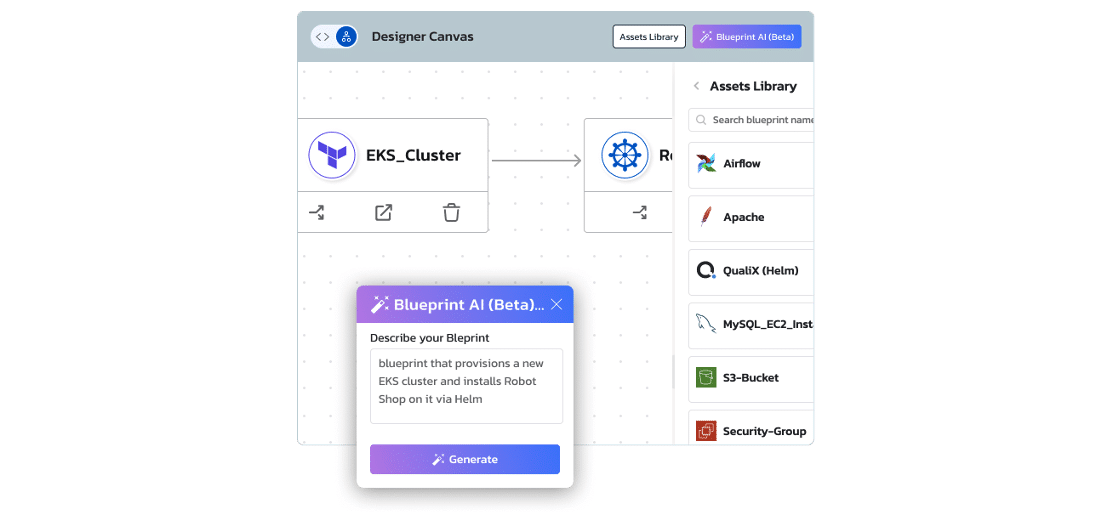

Figure 2: Torque-generated blueprint

Based on your request, Torque pulls the appropriate code from your existing resources into a new blueprint file that includes dependencies, input parameters, and all the details required to create a new environment.
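To show what "dependencies and input parameters" imply in practice, here is a generic sketch of a blueprint as a data structure whose components are deployed in dependency order. This is not Torque’s actual blueprint schema; the `Component` and `Blueprint` classes, their fields, and the source paths are hypothetical and exist only to illustrate the idea.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from graphlib import TopologicalSorter


@dataclass
class Component:
    name: str
    source: str  # hypothetical path to an existing module, chart, or manifest
    depends_on: list[str] = field(default_factory=list)


@dataclass
class Blueprint:
    name: str
    inputs: dict[str, str]
    components: list[Component]

    def deploy_order(self) -> list[str]:
        """Order components so each one comes after everything it depends on."""
        graph = {c.name: set(c.depends_on) for c in self.components}
        return list(TopologicalSorter(graph).static_order())


blueprint = Blueprint(
    name="web-stack",
    inputs={"region": "eu-west-1", "instance_size": "small"},
    components=[
        Component("app", "charts/app", depends_on=["cluster", "database"]),
        Component("cluster", "modules/cluster", depends_on=["network"]),
        Component("database", "modules/database", depends_on=["network"]),
        Component("network", "modules/network"),
    ],
)

print(blueprint.deploy_order())
```

Whatever the concrete format, the network component is provisioned first and the application last, because the order follows the declared dependencies rather than the order in which components are listed.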

The blueprint is reusable, so whenever you need a similar environment, you can simply launch it from Torque’s self-service catalog. This minimizes operational friction and frees your team to focus on implementing new features, rather than wasting time building similar infrastructure.

Getting started with agentic infrastructure in 3 steps

When adopting agentic infrastructure, follow this simple process.

- Start in development: Initiating adoption in your development environment provides the perfect testing ground. These environments are low-risk, as failures won’t impact your existing customers, and your teams can experiment without any stress. With this approach, you minimize business disruption while building confidence in the platform and catching misconfigurations before a wider rollout.

- Limit the initial scope: For example, in Torque, you can start by using a single tool, say Terraform, and select only a handful of modules that you want to expose through the agentic AI platform. Based on these modules, use the built-in Copilot to create different blueprints. Next, have a small group of engineers come in and test the provisioning of new environments from Torque’s service catalog, rather than using Terraform commands. This will get you feedback on what’s working and what needs improvement. You can easily show quick wins to your stakeholders while creating internal champions who can advocate for broader adoption of the platform.

- Gradually scale: Over time, increase the number of modules and tools that you’re using, and test these out as well. Features like Torque’s inactivity dashboard let you identify idle resources and measure outcomes such as time saved on environment provisioning or the number of support tickets eliminated. By scaling gradually, you’ll have time to implement best practices and create standardized workflows. This gives you the chance to maximize both speed and control in the long run, even if the process might feel slow initially. After a couple of months, you’ll reach a point where you can deploy new features to your infrastructure quickly and be confident that these features comply with your organization’s policies.
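Identifying idle resources, mentioned in the last step, comes down to comparing each environment’s last recorded activity against a threshold. The sketch below is a generic illustration and does not reflect how Torque’s inactivity dashboard is implemented; the environment names, timestamps, and two-day threshold are assumptions.

```python
from __future__ import annotations

from datetime import datetime, timedelta


def idle_environments(
    last_activity: dict[str, datetime],
    now: datetime,
    max_idle: timedelta,
) -> list[str]:
    """Return the names of environments with no activity for longer than max_idle."""
    return sorted(
        name for name, seen in last_activity.items() if now - seen > max_idle
    )


# Hypothetical activity log for three non-production environments.
now = datetime(2024, 1, 10, 12, 0)
last_activity = {
    "dev-alice": datetime(2024, 1, 10, 9, 0),
    "dev-bob": datetime(2024, 1, 3, 9, 0),
    "qa-load": datetime(2024, 1, 8, 11, 0),
}

print(idle_environments(last_activity, now, max_idle=timedelta(days=2)))
# ['dev-bob', 'qa-load']
```

Tracking how this list shrinks as adoption grows gives you a simple, concrete number to report to stakeholders.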

Note: Being an early adopter has its perks, but you still need to be mindful about how this might impact your existing workflows. Don’t force adoption. Let your engineers experience the benefits of Torque’s approach for themselves.

Key points

Agentic infrastructure is not meant to replace your existing workflows end-to-end. Instead, it treats your existing tools as the foundation for an intelligent orchestration layer.

When adopting agentic infrastructure, always start with your lower environments, analyze how it behaves there, and then gradually roll it out to higher environments.

Accelerate your infrastructure ops with agentic AI. Visit Torque’s Playground today.