The rapid proliferation of agentic AI (systems composed of autonomous agents operating across distributed environments) represents a foundational shift in how enterprise workloads are built, deployed, and governed. These systems introduce new layers of operational complexity and security risk that traditional cloud management platforms and IT governance models were never designed to handle.

Enterprise infrastructure is being outpaced by the systems it’s meant to support.

A Widening Security and Governance Gap

AI systems increasingly rely on dynamic, ephemeral environments that span public cloud, on-premises hardware, and edge locations. In this context, security is no longer a perimeter function or a static ruleset. Policies must be embedded in the orchestration fabric itself, where they can act on live context, identity, workload type, and lifecycle phase.
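The idea of policies that act on live context can be illustrated with a small, deny-by-default evaluator. This is a minimal Python sketch, not Torque's policy engine or API. The `RequestContext` fields, the `Phase` values, the identity prefix `agent:trusted/`, and both example rules are hypothetical. Each decision also records which rule produced it, which is one simple way to keep enforcement traceable.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, Optional


class Phase(Enum):
    # Hypothetical lifecycle phases an agent workload might pass through.
    PROVISION = "provision"
    RUN = "run"
    TEARDOWN = "teardown"


@dataclass(frozen=True)
class RequestContext:
    """Live context attached to an action an agent wants to perform (illustrative)."""
    identity: str       # authenticated agent or user identity
    workload_type: str  # e.g. "training", "inference"
    phase: Phase        # current lifecycle phase
    action: str         # e.g. "read_dataset", "open_egress"


@dataclass(frozen=True)
class Rule:
    name: str
    matches: Callable[[RequestContext], bool]
    allow: bool


@dataclass(frozen=True)
class Decision:
    allowed: bool
    rule: Optional[str]  # name of the rule that decided, kept for traceability


def evaluate(rules: list[Rule], ctx: RequestContext) -> Decision:
    """Return the first matching rule's verdict; deny when no rule matches."""
    for rule in rules:
        if rule.matches(ctx):
            return Decision(rule.allow, rule.name)
    return Decision(False, None)  # deny by default


# Hypothetical example rules, evaluated in order (first match wins).
RULES = [
    Rule("no-egress-during-training",
         lambda c: c.workload_type == "training" and c.action == "open_egress",
         allow=False),
    Rule("trusted-agents-may-read-data-while-running",
         lambda c: (c.identity.startswith("agent:trusted/")
                    and c.phase is Phase.RUN
                    and c.action == "read_dataset"),
         allow=True),
]

if __name__ == "__main__":
    ctx = RequestContext("agent:trusted/etl-1", "training", Phase.RUN, "read_dataset")
    print(evaluate(RULES, ctx))
```

First-match ordering makes rule precedence explicit. Falling through to a deny means that a context nobody anticipated is refused rather than silently permitted.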

Conventional platforms, whether security information and event management (SIEM) systems, cloud management platforms (CMPs), or DevOps toolchains, provide fragmented visibility and delayed enforcement. They were built for static environments with predictable lifecycles. Agentic systems break those assumptions by design.

Key challenges include:

- Explosion of policy complexity: Multiple autonomous agents executing in parallel require individualized policy enforcement and telemetry analysis.

- Lack of environmental awareness: Agents operate in contexts with limited visibility into infrastructure state, access control boundaries, or data movement policies.

- Inefficient handoffs: Traditional workflows depend on human mediation and slow CI/CD paths. This model is incompatible with the high-velocity, multi-agent AI execution patterns now emerging.

A recent study by IDC estimates that AI lifecycle management inefficiencies, including environment setup, policy configuration, and orchestration gaps, could cost enterprises over $18 billion annually by 2026 in lost productivity and excess infrastructure spend. These costs are compounded by compliance exposure and time-to-market delays.

A Control Plane Built for Autonomy

Torque from Quali addresses these challenges by reframing the infrastructure problem. Instead of managing workloads via reactive policies or external tools, Torque integrates governance, cost control, and orchestration into a unified platform built explicitly for AI-first environments.

This includes:

- Policy-as-code enforcement at the orchestration layer, not just infrastructure-as-code (IaC) linting or post-hoc monitoring.

- Runtime-aware infrastructure provisioning, where environments are dynamically configured based on user identity, workload classification, and operational context.

- Agent-to-agent coordination frameworks, enabling secure, context-sensitive interactions between autonomous components and services.
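Runtime-aware provisioning can be pictured as a function that resolves an environment's settings from three inputs: who is asking, what kind of workload it is, and the current operational state. The Python below is a hypothetical sketch, not Torque's blueprint format or API. The classification names, the `platform` team, the region names, the TTL values, and the budget flag are all assumptions chosen for illustration.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class EnvSpec:
    """Hypothetical environment settings resolved at provisioning time."""
    network: str                      # "isolated" or "shared"
    allowed_regions: tuple[str, ...]  # where the environment may be created
    ttl_hours: int                    # environment is torn down after this many hours
    requires_approval: bool           # whether a human must sign off before launch


# Baseline settings per workload classification (illustrative values only).
BASELINES = {
    "public": EnvSpec("shared", ("us-east", "eu-west"), 72, False),
    "internal": EnvSpec("shared", ("us-east",), 24, False),
    "regulated": EnvSpec("isolated", ("us-east",), 8, True),
}


def resolve_env(classification: str, team: str, budget_exhausted: bool) -> EnvSpec:
    """Derive an environment spec from classification, identity, and context."""
    if classification not in BASELINES:
        # Unclassified workloads are not provisioned at all.
        raise ValueError(f"unknown classification: {classification!r}")
    spec = BASELINES[classification]
    # Identity-based adjustment: a hypothetical platform team gets longer-lived environments.
    if team == "platform":
        spec = replace(spec, ttl_hours=spec.ttl_hours * 2)
    # Operational context: once the budget is exhausted, cap lifetimes and require sign-off.
    if budget_exhausted:
        spec = replace(spec, ttl_hours=min(spec.ttl_hours, 4), requires_approval=True)
    return spec


if __name__ == "__main__":
    print(resolve_env("regulated", "platform", budget_exhausted=False))
```

Raising an error on an unknown classification mirrors the deny-by-default stance of Zero Trust. The sketch does not fall back to a permissive baseline.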

Torque’s architectural model applies the principles of Zero Trust and lifecycle governance not as separate capabilities but as embedded, enforceable constraints within every blueprint, runtime, and interaction.

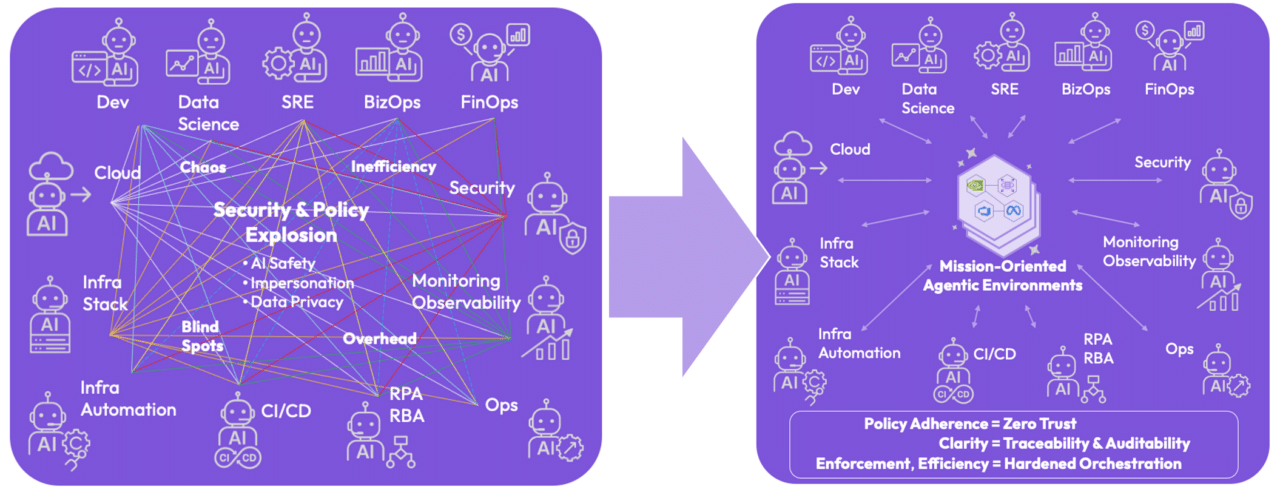

The graphic below, extracted from internal architectural frameworks, clarifies the contrast between traditional orchestration and the demands of coordinated, agentic execution. It may be a useful inclusion to explain Torque’s model of “mission-oriented agent environments.”

The operational chaos of AI at scale without Torque (left) vs. coordinated, policy-aligned agentic environments enabled by Torque (right).

Securing the Future of AI Requires a New Category

The category emerging around Torque, often referred to as an Infrastructure Platform Engineering (IPE) layer, is distinct from both traditional CMPs and developer-focused PaaS models. Rather than trying to simplify infrastructure, it orchestrates complexity by providing a framework that aligns workloads, agents, and environments under centralized governance and optimization controls.

This shift is not theoretical. Organizations in financial services, healthcare, and defense are already encountering the governance fragmentation, data handling risks, and cost overruns that result from attempting to scale AI using outdated infrastructure patterns.

A sustainable, governable AI infrastructure model requires:

- Blueprint-driven orchestration tied to policy and compliance controls

- Runtime enforcement mechanisms with zero-trust access mediation

- Context-aware coordination across autonomous workloads and agents

- Full-stack observability and policy traceability

Torque enables this model today. Its deployment across Fortune 500 companies is already demonstrating tangible reductions in cloud waste, increases in environment reuse, and improvements in operational assurance.

Conclusion

As AI systems become more agentic, infrastructure must become more intelligent. That does not mean simply more automated. It means capable of secure, adaptive, policy-aligned operations across a dynamic, distributed, and accelerating compute landscape.

Further Reading

To explore the concepts discussed here in greater technical and operational depth, refer to:

- Securing the Future of AI – Whitepaper: A comprehensive overview of the shifting security and infrastructure requirements for AI and agentic systems.

- Agentic AI Security Framework: An in-depth look at the ten critical capabilities needed to secure and operationalize multi-agent AI systems at scale.

For those evaluating the practical implications of agentic orchestration and governance, the Torque Playground offers a hands-on environment to experience the platform’s orchestration and policy capabilities.