We’ve all copied someone’s main.tf at 2 a.m., tweaked a few variables, hit terraform apply, and hoped that nothing would catch fire. It works—until the next sprint when you realize the same three-hundred-line script is duplicated across six repos and two clouds.

Over the past decade we’ve seen tooling explode: We’ve got cloud-native service catalogs, low-code deployment buttons, even AI assistants that promise one-click infrastructure. Yet when the incident phone rings at midnight, the only artifact everyone trusts is a well-reviewed Terraform module committed to Git and stamped by CI before the change hits production.

In 2025, we have to navigate pressures from platforms, budgets, and auditors, and careless Infrastructure-as-Code practices won’t suffice. Well-crafted Terraform modules are what make the difference. When we elevate a module from “works on my laptop” to “published artifact,” we create a force multiplier. Junior engineers can provision production-grade networks without memorizing every AWS acronym, and platform teams can enforce cost and security guardrails once instead of chasing every microservice owner.

This blog post distills lessons from code reviews and late-night incident calls into a handy, opinionated guide to help you write Terraform modules like a pro.

Why mastering Terraform modules still matters in 2025

Even with OpenTofu, Pulumi, Crossplane, and a sea of dashboards competing for attention, Terraform modules remain the backbone of repeatable, auditable cloud automation. Standardizing on a battle-tested module instead of reinventing the wheel enables you to:

- Accelerate delivery by codifying knowledge and best practices.

- Cut drift because the source of truth lives in Git, not on a sticky note.

- Lower spending by incorporating right-sizing and tagging into code rather than waiting for quarterly cost-analysis review emails.

Modules also act as living contracts between teams: Inputs define what’s negotiable, outputs declare guarantees, and version tags chronicle every feature ever shipped. Auditors, SREs, and cost analysts read the same source, which reduces reliance on tribal knowledge. That shared understanding turns infrastructure discussions from blame games into data-driven conversations.

Design principles of production-grade modules

Before opening your code editor, let’s align on the principles that separate disposable code from modules that stand the test of time:

- Explicit inputs, minimal outputs: Type every variable, add validation blocks, and expose only what downstream callers need.

- Version fences: Pin provider ranges (such as >= 5.40, < 6) and the required Terraform version, so upgrades are explicit decisions.

- State isolation: Unique backend keys per environment prevent developer mistakes from nuking production.
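
As a minimal sketch of the first principle, the two inputs below are typed and validated. The variable names cloud and cidr_block match the ones used in the walk-through later in this post; the descriptions and validation rules are assumptions added for illustration, not part of the original module.

```hcl
# variables.tf (sketch): typed inputs with validation blocks.

variable "cloud" {
  type        = string
  description = "Target cloud for the network: \"aws\" or \"azure\"."

  validation {
    # Reject anything other than the two supported values at plan time.
    condition     = contains(["aws", "azure"], var.cloud)
    error_message = "cloud must be either \"aws\" or \"azure\"."
  }
}

variable "cidr_block" {
  type        = string
  description = "CIDR range for the VPC or VNet, for example 10.20.0.0/16."

  validation {
    # cidrhost() errors on a malformed CIDR string; can() turns that
    # error into false so the validation fails with a readable message.
    condition     = can(cidrhost(var.cidr_block, 0))
    error_message = "cidr_block must be a valid CIDR range such as 10.20.0.0/16."
  }
}
```

With rules like these, a typo such as cloud = "asw" is caught by terraform plan before any provider is asked to create anything.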

Project scaffolding and conventions that scale

A newcomer should be able to skim the module repository and find precisely where to put a variable or look up an output.

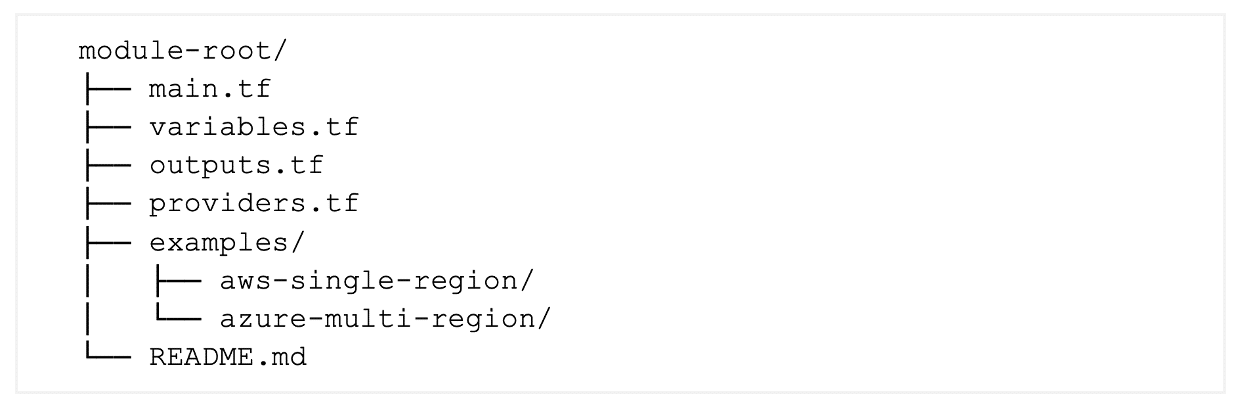

Placing main.tf, variables.tf, outputs.tf, and providers.tf in the root folder makes it instantly clear where the module’s logic, inputs, outputs, and provider pinning live, so a new contributor doesn’t have to hunt through subfolders. The dedicated examples/ directory lets anyone copy and paste a runnable scenario (AWS or Azure in this case) while keeping demo code out of production paths, and the top-level README.md gives every consumer up-to-date usage instructions at a glance.

Hands-on walk-through: Building a cross-provider networking module

Picture a product team that needs to drop the same microservice into two clouds: AWS us-east-1 today, Azure West Europe tomorrow. Instead of shipping two codebases, you can use one Terraform module that switches clouds with a single variable.

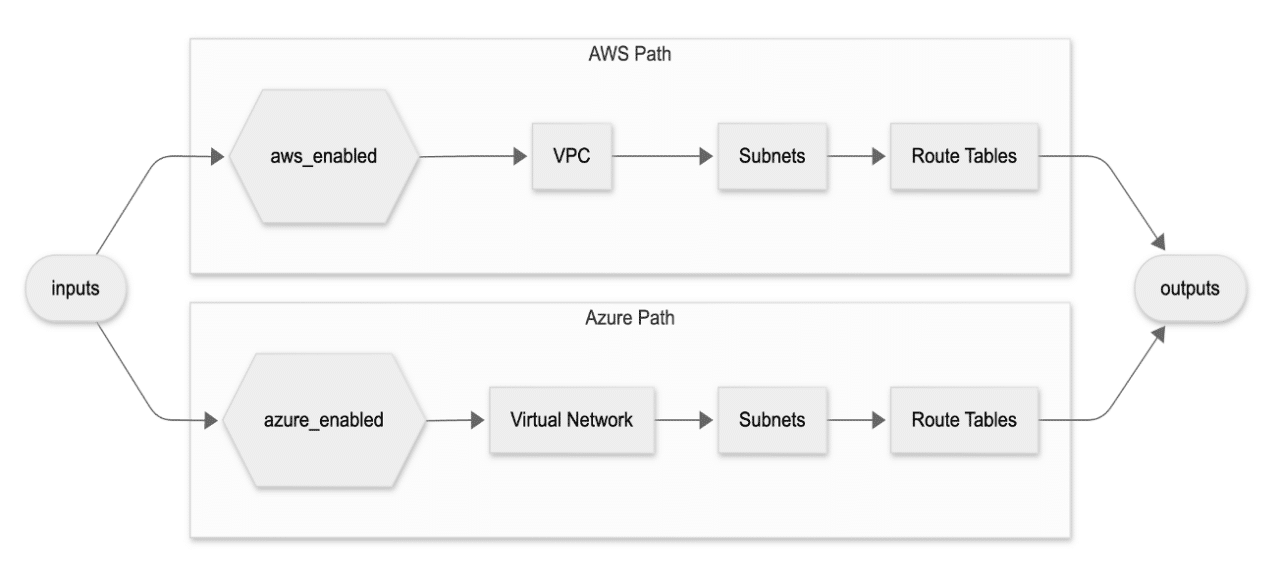

The diagram visualizes how a single input layer fans out into two mutually exclusive paths, each provisioning its own VPC/VNet, subnets, and route tables before converging back into a shared outputs block.
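
From the caller’s side, using such a module might look like the sketch below. The input and output names (cloud, cidr_block, aws_region, aws_profile, cidr_blocks) are the ones the module uses in the sections that follow. The file path, the relative source, the profile name, and the CIDR value are hypothetical, and the sketch assumes the Azure-specific inputs have defaults so they can be omitted on the AWS path.

```hcl
# examples/aws/main.tf (hypothetical path and values)

module "network" {
  # Relative path to the module root. A registry address or a Git URL
  # pinned to a release tag would be used the same way.
  source = "../.."

  cloud      = "aws" # set to "azure" to take the other path
  cidr_block = "10.20.0.0/16"

  aws_region  = "us-east-1"
  aws_profile = "sandbox" # hypothetical named profile
}

# Callers read the same output name regardless of the selected cloud.
output "network_cidr" {
  value = module.network.cidr_blocks
}
```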

Provider scaffolding with aliases

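Pin Terraform and provider versions so upgrades happen only when you choose. The primary alias names the cloud account or subscription to use; each resource selects it through the provider meta-argument (for example, provider = aws.primary), because Terraform does not apply an aliased configuration automatically: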

    terraform {
      required_version = ">= 1.8"

      required_providers {
        aws     = { source = "hashicorp/aws", version = ">= 5.40, < 6" }
        azurerm = { source = "hashicorp/azurerm", version = ">= 3.100, < 4" }
      }
    }

    provider "aws" {
      alias   = "primary"
      region  = var.aws_region
      profile = var.aws_profile
    }

    provider "azurerm" {
      alias = "primary"

      # features is a nested block, not an argument, so it takes no "=".
      features {}

      subscription_id = var.azure_subscription
    }

Conditional resources without branching hell

A simple count expression switches each block on or off based on the var.cloud value, letting one module serve AWS or Azure from the same repository. There’s one CIDR variable, zero messy if-else chains:

    resource "aws_vpc" "this" {
      count    = var.cloud == "aws" ? 1 : 0
      provider = aws.primary # aliased configurations must be selected explicitly

      cidr_block           = var.cidr_block
      enable_dns_hostnames = true

      tags = merge(local.tags, { "module" = "x-cloud-net" })
    }

    resource "azurerm_virtual_network" "this" {
      count    = var.cloud == "azure" ? 1 : 0
      provider = azurerm.primary

      name                = local.name
      address_space       = [var.cidr_block]
      location            = var.azure_location
      resource_group_name = var.azure_rg

      tags = local.tags
    }

Surface portable outputs

Both cloud paths expose the same output names, keeping provider-specific IDs behind the scenes. Callers grab cidr_blocks and subnet_ids without caring which cloud produced them:

    output "cidr_blocks" {
      # Parentheses let the conditional expression span several lines.
      value = (
        var.cloud == "aws"
        ? aws_vpc.this[0].cidr_block
        : azurerm_virtual_network.this[0].address_space[0]
      )
    }

    output "subnet_ids" {
      value = (
        var.cloud == "aws"
        ? values(aws_subnet.private)[*].id
        : values(azurerm_subnet.private)[*].id
      )
    }

Advanced patterns to level up your modules

Once the cross-cloud scaffolding feels routine, it’s time to squeeze more power out of Terraform’s language. The patterns below can reduce copy-paste, help share data safely, and bake security, cost, and quality gates straight into every merge.

Map-driven resources with for_each and dynamic blocks

Need many similar resources that change every sprint? Let a map drive the loop so you edit variables, not HCL. The same map could feed a dynamic block that fans out inline ingress rules inside an aws_security_group; the example here uses the for_each meta-argument on standalone rules instead, creating one rule per map entry, so adding or removing a port is a one-line variable change:

    variable "sg_matrix" {
      type = map(object({
        port        = number
        protocol    = string
        description = string
      }))

      default = {
        http  = { port = 80, protocol = "tcp", description = "Web" }
        https = { port = 443, protocol = "tcp", description = "TLS" }
      }
    }

    resource "aws_security_group_rule" "matrix" {
      # One rule per map entry on AWS; the empty map creates none on Azure.
      for_each = var.cloud == "aws" ? var.sg_matrix : {}
      provider = aws.primary

      type              = "ingress"
      security_group_id = aws_security_group.main.id
      from_port         = each.value.port
      to_port           = each.value.port
      protocol          = each.value.protocol
      description       = each.value.description
      cidr_blocks       = ["0.0.0.0/0"]
    }

Consume remote state safely

Sometimes you need to reference infrastructure that another team already owns, using read-only access. The terraform_remote_state data source pulls outputs from a remote backend, here Amazon S3. Because you only read these values and never modify the other team’s state, both teams can deploy independently:

    data "terraform_remote_state" "network" {
      backend = "s3"

      config = {
        bucket = "terraform-states"
        key    = "prod/network/terraform.tfstate"
        region = "us-east-1"
      }
    }

    resource "aws_eip" "bastion" {
      count = data.terraform_remote_state.network.outputs.enable_bastion ? 1 : 0

      instance = aws_instance.bastion[0].id
      tags     = local.tags
    }
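
For the lookup above to work, the owning team has to publish enable_bastion as an output of its root module, because terraform_remote_state exposes only root-level outputs. A minimal sketch of that producing side follows. The backend settings mirror the bucket, key, and region from the consumer snippet; the variable and its default are assumptions made for illustration.

```hcl
# Network team's root configuration (hypothetical sketch).

terraform {
  backend "s3" {
    bucket = "terraform-states"
    key    = "prod/network/terraform.tfstate"
    region = "us-east-1"
  }
}

# Assumed input: how the network team decides the flag is not shown
# in this post, so a plain boolean variable stands in for it here.
variable "enable_bastion" {
  type        = bool
  default     = false
  description = "Whether consumers may attach a bastion host to this network."
}

# Only outputs declared in the root module are visible to
# terraform_remote_state readers in other configurations.
output "enable_bastion" {
  description = "Published flag read by downstream stacks."
  value       = var.enable_bastion
}
```

One design caveat: a reader of terraform_remote_state needs read access to the whole state object, not just its outputs, so grant that access only to teams that may see everything stored in that state.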

Shift-left compliance with policy-as-code tooling

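You can block destructive configuration options before they are ever applied. The following Rego rule is written for Open Policy Agent (OPA), and a runner such as Conftest can evaluate it in CI. It assumes the pipeline passes each planned resource as input with resource_type and values fields. If a VPC CIDR doesn’t start with 10., the check fails and the PR status turns red, with no human review required: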

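    package terraform.vpc_cidr

    # Opt in to Rego v1 syntax (the "contains" and "if" keywords).
    import rego.v1

    # Deny any aws_vpc whose CIDR falls outside 10.0.0.0/8.
    deny contains msg if {
      input.resource_type == "aws_vpc"
      not startswith(input.values.cidr_block, "10.")
      msg := sprintf("VPC CIDR %s must be in 10.0.0.0/8", [input.values.cidr_block])
    }

Gates for security and costs all in one pipeline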

You can run all the scanners and cost calculations in a single GitHub Actions workflow, so “green” really means green. The following job validates, lints, security-scans, and posts an Infracost estimate as a comment; with branch protection requiring this check, any failed step keeps the merge button locked:

    name: quality-gates
    on: pull_request

    jobs:
      verify:
        runs-on: ubuntu-latest
        steps:
          - uses: actions/checkout@v4
          - uses: hashicorp/setup-terraform@v3
          - run: terraform init -backend=false
          - run: terraform validate -no-color
          # tflint, checkov, and infracost are assumed to be installed on the runner.
          - run: tflint --recursive
          - run: checkov -d . --quiet
          - run: infracost breakdown --path . --format json --out-file infracost.json
          - uses: infracost/actions/comment@v2

Automation and advanced tooling can turn “module madness” into velocity

Even when your Terraform modules are flawless, turning them into a self-service platform using the strategies outlined above can still require hours and hours of scripting and ticket handling. This is where automation and advanced tooling can make a significant difference, helping you bridge the gap between best practices and effortless implementation.

One way to do that is with a platform like Quali Torque, which automates the chores that live between a Git tag and a running environment. It can provide:

- Instant IaC from live clouds: Cloud Curate inventories every VPC, VM, subnet, and database it finds, then generates ready-to-commit Terraform code that mirrors the exact settings in place.

- Normalized tags and catalogs: Quali Torque ingests generated files, applies organization-wide tags, versions them, and surfaces a searchable module library that any squad can reuse.

- Generative AI blueprints to orchestrate: Torque’s AI Blueprint Designer lets you describe an outcome, such as “high-availability EKS plus Redis in a private subnet,” and automatically wires the necessary modules, parameters, and dependencies. Under the hood, it discovers what’s in your repos, chooses the right modules, and writes the glue code you usually craft by hand, shaving days off every project kickoff.

- Governance, RBAC, and cost optimization: All launches go through Torque’s service catalog, so rules about limited access, location restrictions, and shutdown schedules for unused services apply automatically. FinOps dashboards flag oversized instances and orphaned volumes in real time, while role-based access controls keep shadow IT in check.

Taken together, these layers mean you spend your energy refining Infrastructure-as-Code best practices while Torque does the heavy lifting.

Ready to turn your Terraform modules into instant, governed environments? To explore how you can elevate your IaC operations, visit the Torque Playground and start your free trial of Quali Torque today.